Writing benchmarks for your Go code is simple because everything you need is built into the standard library. It works much like writing tests: where a test receives a *testing.T pointer, a benchmark receives a *testing.B. However, benchmarks are usually a snapshot of the current situation. They are run once when an important function is implemented, but they do not run during continuous integration (CI). As a new developer joining an existing team, you don't know which code is performance-critical, and you don't know whether your changes affect those benchmarks. In this post we show you how to set up benchmarks that run in your CI and how to monitor their values over time, so that you don't worsen them by accident.

Let's take a look at our demo repository github.com/seriesci/bench with a very simple function.

    // bench.go
    package bench

    import "time"

    // Efficient is a super efficient function which needs a benchmark.
    func Efficient(a, b int) int {
        time.Sleep(500 * time.Nanosecond)
        return a + b
    }

For this dummy function we would like to add a benchmark.

    // bench_test.go
    package bench

    import "testing"

    func BenchmarkEfficient(b *testing.B) {
        for n := 0; n < b.N; n++ {
            Efficient(1, 2)
        }
    }

Now you can run the following command to see the benchmark on the command line.

    $ go test -bench=.
    goos: linux
    goarch: amd64
    BenchmarkEfficient-8   71128   20363 ns/op
    PASS
    ok   seriesci/bench   2.637s

We're only interested in the line starting with BenchmarkEfficient, and especially in its final value, 20363 ns/op. We have to filter out this information and send it to seriesci to track the value continuously. We use grep to select the line starting with BenchmarkEfficient and awk to pick the right column. The line consists of four columns, and the value is the third one. That is where '{print $3}' comes from.
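The same column-picking can be sketched in Go for readers who would rather not depend on shell tools. This is only an illustration: the helper name parseNsPerOp is hypothetical, and it assumes the four-column line shape shown above (name, iteration count, value, unit).

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// parseNsPerOp (hypothetical helper) scans `go test -bench` output for the
// line of the named benchmark and returns its ns/op value, which is the
// third whitespace-separated column.
func parseNsPerOp(output, name string) (float64, error) {
	sc := bufio.NewScanner(strings.NewReader(output))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		// Assumed shape: BenchmarkEfficient-8 71128 20363 ns/op
		if len(fields) < 4 || !strings.HasPrefix(fields[0], name) || fields[3] != "ns/op" {
			continue
		}
		return strconv.ParseFloat(fields[2], 64)
	}
	if err := sc.Err(); err != nil {
		return 0, err
	}
	return 0, fmt.Errorf("benchmark %q not found", name)
}

func main() {
	// Sample output copied from the run shown in this post.
	out := "goos: linux\ngoarch: amd64\n" +
		"BenchmarkEfficient-8 71128 20363 ns/op\n" +
		"PASS\nok seriesci/bench 2.637s\n"

	v, err := parseNsPerOp(out, "BenchmarkEfficient")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(v) // prints 20363
}
```

The shell pipeline in this post does the same job with grep and awk, which is usually all a CI script needs.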

    $ go test -bench=. | grep BenchmarkEfficient | awk '{print $3}'
    20363

That's great. Now we simply take this value and post it to the seriesci API via curl. We use xargs and its replstr feature (-I {}) to put the value into the right position. ${TOKEN} and ${CIRCLE_SHA1} are environment variables that are available during CI: ${TOKEN} has to be set manually, whereas ${CIRCLE_SHA1} is provided automatically by CircleCI.

$ go test -bench=. | grep BenchmarkEfficient | awk '{print $3}' | xargs -I {} curl \

--header "Authorization: Token ${TOKEN}" \

--data value="{}" \

--data sha="${CIRCLE_SHA1}" \

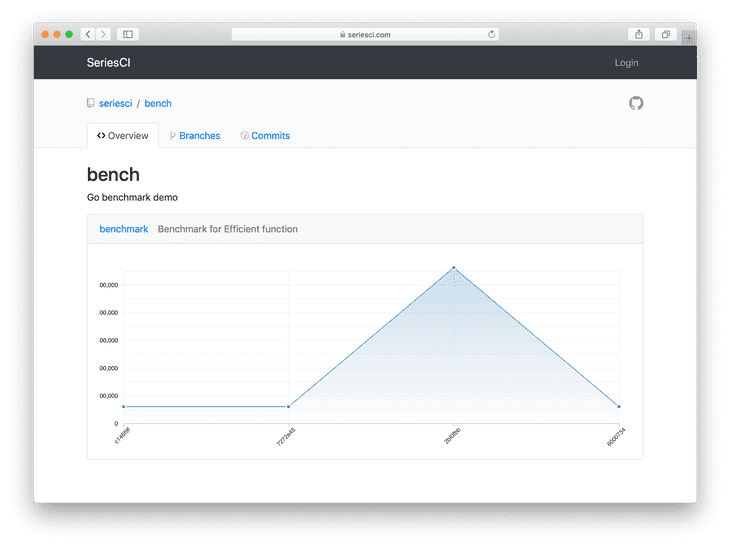

https://seriesci.com/api/repos/seriesci/bench/benchmark/valuesVoilà. We're done and it's that easy to integrate benchmarks into your Continuous Integration. From now on our Efficient function is benchmarked with every commit, pull request and code change. It will be impossible for anyone to introduce accidental changes which will degrade our benchmark.
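If you prefer to avoid xargs and curl, the upload step could also be written as a small Go program. The following is a minimal sketch, not an official seriesci client: the helper name newValueRequest is hypothetical, and it assumes the API accepts the same form-encoded value and sha fields and the same Authorization header that the curl command sends.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
	"os"
	"strings"
)

// newValueRequest (hypothetical helper) builds the form-encoded POST request
// that mirrors the curl call: two fields, value and sha, plus a token header.
func newValueRequest(endpoint, token, sha, value string) (*http.Request, error) {
	form := url.Values{}
	form.Set("value", value)
	form.Set("sha", sha)

	req, err := http.NewRequest(http.MethodPost, endpoint, strings.NewReader(form.Encode()))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Authorization", "Token "+token)
	// curl --data sends this content type by default.
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	return req, nil
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: post <value>")
		return
	}

	req, err := newValueRequest(
		"https://seriesci.com/api/repos/seriesci/bench/benchmark/values",
		os.Getenv("TOKEN"),       // set manually in the CI settings
		os.Getenv("CIRCLE_SHA1"), // provided by CircleCI
		os.Args[1],               // the ns/op value, e.g. 20363
	)
	if err != nil {
		fmt.Println("error:", err)
		return
	}

	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	defer resp.Body.Close()
	fmt.Println("status:", resp.Status)
}
```

Keeping the request construction in its own function makes it possible to check the header and form fields in a unit test without touching the network.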

Mirco Zeiss is the CEO and founder of seriesci.
